Memory in the MS-DOS era

10 July 2020 - ~13 Minutes Retro Computing

Cover image credit: VintageComputer.net, Copyright Degnan, Co.

MS-DOS memory management was always confusing to teenage me. But with the hindsight of a couple of decades, it’s actually not as difficult to understand as I first thought.

In a change from my usual retro computing activities around Commodore computers, I’ve been looking again at MS-DOS. I never had a PC at home until the Windows 95 era, but I did a lot with MS-DOS during a school holiday job. My memories were of memory constraints that I didn’t understand and funny acronyms, along with continual eye-rolling because the Amiga computers did all of this stuff so much better. It all seemed so unnecessarily confusing, a really awful design that had further really awful stuff dumped on top of it.

However, looking back, yes it’s complicated, but it’s perhaps more understandable than I first realised. And maybe it’s not such an awful design, but - at least in the early days - the only possible way it could have been done.

The original PC - the IBM 5150

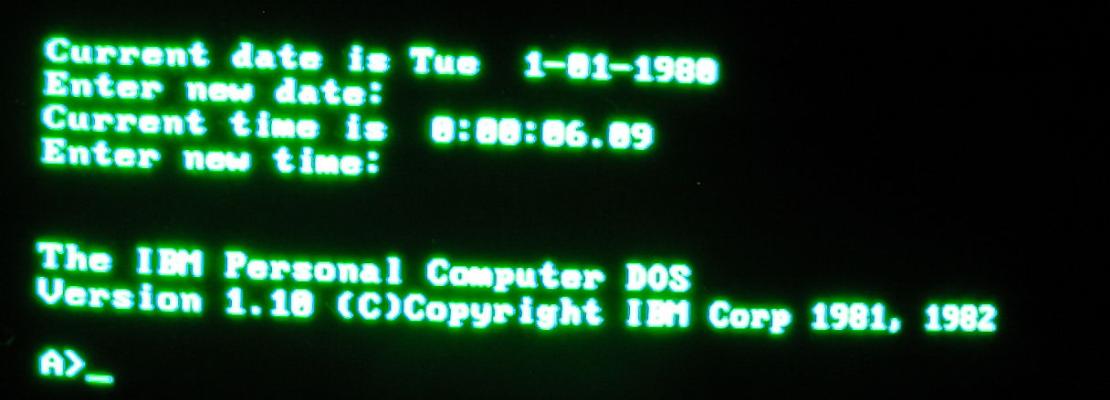

The original PC was the IBM 5150. This was based on the Intel 8088 CPU, which provided an 8-bit data bus and a 20-bit address bus. The PC could boot DOS from disk, or run “Cassette BASIC” which was on ROM.

A 20-bit address bus means a 1MiB address space. Everything that had to be memory-mapped - main RAM, video RAM, ROMs and memory-mapped IO - had to fit into this 1MiB space. The 8088 CPU imposed some requirements on this address space: on boot it would start executing code at address FFFF0h, just 16 bytes below the end of the address space. For most practical purposes, this means that the system ROM has to be at the end, right up against the 1MiB boundary.

IBM chose to dedicate the first 640KiB of the space to RAM, from location zero up to the 640KiB boundary. That is five-eighths of the address space, a little under two thirds. The ROMs - the BIOS ROM and Cassette BASIC - were at the opposite end, finishing right at the 1MiB boundary. The space in between was reserved for memory-mapped devices, the most obvious example being the video card, which would need to place some RAM in the memory space.

It should be stressed that there’s nothing unusual about this kind of arrangement - all computers of that era had limited address space and had to make decisions about how to divide it. IBM was also totally reasonable about the boundaries they chose. Yes, they could have reserved three quarters of the address space for RAM instead of five-eighths. That would have helped in later years, but even that would eventually have become a barrier. It should also be remembered that the IBM 5150 came with either 16KiB or 64KiB of memory, expandable to 256KiB - a huge margin for growth, at the time!

The space up to 640KiB later became known as conventional memory, and the space between 640KiB and 1MiB as the upper memory area or UMA.
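To make the layout concrete, here is a small Python sketch of the 1MiB map using the boundaries above. The region labels are only descriptive. The only fixed numbers are the 640KiB boundary, the top of the 20-bit address space, and the 8088’s reset address of FFFF0h.

```python
# A sketch of the original PC's 1 MiB real-mode address space, using the
# boundaries described in the text. Region names are descriptive labels only.
KIB = 1024

ADDRESS_BITS = 20
ADDRESS_SPACE = 2 ** ADDRESS_BITS      # 1 MiB = 1,048,576 bytes
CONVENTIONAL_END = 640 * KIB           # 0xA0000
RESET_VECTOR = 0xFFFF0                 # where the 8088 starts executing after reset

regions = {
    "conventional memory": (0x00000, CONVENTIONAL_END),
    "upper memory area": (CONVENTIONAL_END, ADDRESS_SPACE),
}

for name, (start, end) in regions.items():
    size_kib = (end - start) // KIB
    print(f"{name:20} {start:05X}h-{end - 1:05X}h  {size_kib} KiB")

# The reset vector lies 16 bytes below the 1 MiB boundary, inside the UMA,
# which is why the system ROM has to sit at the top of the address space.
print(f"reset vector {RESET_VECTOR:05X}h, {ADDRESS_SPACE - RESET_VECTOR} bytes below 1 MiB")

# 640 / 1024: the fraction of the address space given to RAM.
print(f"fraction given to RAM: {CONVENTIONAL_END / ADDRESS_SPACE}")
```

Running it reports 640KiB of conventional memory (00000h-9FFFFh) and 384KiB of upper memory (A0000h-FFFFFh).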

Along with the IBM 5150 came IBM PC DOS, which was actually developed by Microsoft. In early versions, IBM PC DOS and MS-DOS were almost identical. Software developers wrote their code to work on IBM PC DOS or MS-DOS on the 5150. Little did they know that the environment they wrote their code in would survive for another 20 years. DOS and its variations were not fully superseded for consumers until the release of Windows XP in 2001.

Memory pressure

The 5150 could be bought with as little as 16 KiB of RAM, and its motherboard held a maximum of 64 KiB. Its successor, the 5160 (the “XT”), supported up to 640 KiB. As noted above, this is the maximum amount of conventional memory. Beyond this point the video adapter used the memory space, and that could not be changed without breaking compatibility.

Conventional memory was filled up with some CPU and BIOS data structures, the operating system (IBM PC DOS or MS-DOS), and device drivers. Whatever was left was a contiguous space that applications could use. Newer versions of DOS would get larger as they added new features, and adding device drivers further reduced the memory available to applications. Memory filled up from the “bottom” of memory (starting from memory location zero): hardware data, then DOS, then the device drivers, then the application that the user loads, then the working memory that the application needs.

Normally an application would exit, and the memory it consumed would be freed. But DOS had an interesting feature called “terminate and stay resident”, or TSR. Invoking TSR would end the application, but would not remove it from memory. The user would be returned to DOS and could start another application. Before it invoked TSR, the application would probably hook some interrupts or wedge itself into the computer somehow so that it could still do something useful - for example, respond to serial port data (say from a mouse) or a specific keypress so that it could jump back into life.

You can see the obvious problem here - that TSR has consumed a chunk of memory that can no longer be used by any other application. Start loading multiple TSRs and the pressure on the 640 KiB of conventional memory increases.

It was clear that more memory was needed. But how to deliver this in a way that was still compatible with the original IBM 5150 and DOS architecture?

The EMS era - Expanded Memory

Alongside the PC AT, another solution to the memory problem appeared, one that also worked on the original PC and the XT: EMS, or Expanded Memory Specification. Its technique was the same as that used by contemporary 8-bit machines such as the Commodore 64 and Spectrum 128k - bank switching. Extra RAM was added, but it was only visible through a smaller “window”, which EMS located in the upper memory area. Software was able to use the EMS API to control which parts of that RAM were selected and accessible through the window.
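Bank switching is easier to see than to describe, so here is a toy simulation. It is not the real EMS interface, which programs reached through software interrupt 67h, and the class and method names are hypothetical. The window layout, a 64 KiB page frame made of four 16 KiB pages, follows the LIM EMS design. The D0000h frame address and the 1 MiB board size are assumptions.

```python
# Simulation of EMS-style bank switching. Four 16 KiB physical pages form a
# 64 KiB page frame; the D0000h frame address and 1 MiB board are assumptions.
PAGE = 16 * 1024
FRAME_BASE = 0xD0000
FRAME_PAGES = 4


class ExpandedMemoryBoard:
    def __init__(self, total_bytes):
        # Logical pages: RAM on the expansion board, invisible until mapped.
        self.logical = [bytearray(PAGE) for _ in range(total_bytes // PAGE)]
        # Which logical page each physical page of the window shows (None = unmapped).
        self.mapping = [None] * FRAME_PAGES

    def map_page(self, physical, logical):
        self.mapping[physical] = logical

    def _locate(self, address):
        offset = address - FRAME_BASE
        if not 0 <= offset < FRAME_PAGES * PAGE:
            raise ValueError("address outside the page frame")
        physical, within = divmod(offset, PAGE)
        logical = self.mapping[physical]
        if logical is None:
            raise ValueError("no logical page mapped there")
        return self.logical[logical], within

    def write(self, address, value):
        page, within = self._locate(address)
        page[within] = value

    def read(self, address):
        page, within = self._locate(address)
        return page[within]


ems = ExpandedMemoryBoard(1024 * 1024)   # 64 logical pages
ems.map_page(0, 10)
ems.write(FRAME_BASE, 0x42)              # lands in logical page 10
ems.map_page(0, 11)                      # switch banks: same address...
print(hex(ems.read(FRAME_BASE)))         # ...now shows logical page 11: 0x0
ems.map_page(0, 10)
print(hex(ems.read(FRAME_BASE)))         # back to page 10: 0x42
```

The program always uses the same addresses in the window. Only the mapping changes underneath it.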

However, this was always going to be a hack until new CPUs could support larger address spaces. This started with the Intel 80286 CPU, and most apps needing lots of memory switched to the techniques it enabled. For the few apps that still needed EMS, EMM386 could emulate it without EMS hardware. EMS faded into obscurity, and we can largely ignore it.

The PC AT, the Intel 80286, and XMS

IBM’s PC AT, or model 5170, brought in the Intel 80286 CPU. This was a true 16-bit CPU and had a 24-bit address bus, meaning it could use up to 16MiB of address space. This newly-reachable memory beyond the 1MiB boundary is called extended memory.

Unfortunately, it came with a drawback. Extended memory was only accessible when the CPU was in its new protected mode. When the CPU was in its classic real mode, programs were still stuck with 1MiB of address space. Converting a program from real mode to protected mode was non-trivial, because programs were written for either one mode or the other. So all existing MS-DOS apps were stuck with conventional memory: the memory beyond 1 MiB was invisible to them, and at most 640 KiB below that was usable.

To work around this, the Extended Memory Specification, or XMS, was developed. This was a software API which allowed real mode programs to use extended memory. It required an XMS manager, which in MS-DOS was provided by the HIMEM.SYS device driver. An application could allocate blocks of memory beyond the 1MiB boundary. It couldn’t access that memory directly, but it could ask the XMS manager to copy data between extended memory and conventional memory. The XMS manager would switch the CPU to protected mode, where it could access all memory, and copy the requested data. It would then reset the CPU back to real mode and resume execution. This gave MS-DOS programs access to much larger amounts of memory, but they could only work with a portion of it at a time.
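Here is a rough simulation of that copy-in, copy-out pattern. It is loosely modelled on the XMS “move extended memory block” call, in which a handle of 0 refers to conventional memory. The Python class, its methods and the sizes are hypothetical, though. For simplicity, offsets into conventional memory are plain linear offsets rather than the segment:offset pairs the real interface uses.

```python
# Simulation of copying through an XMS manager. The program can only index
# conventional memory directly; extended memory is reached through move().
class XmsManager:
    def __init__(self, conventional):
        self.conventional = conventional
        self.handles = {}
        self.next_handle = 1

    def allocate(self, size):
        handle = self.next_handle
        self.handles[handle] = bytearray(size)
        self.next_handle += 1
        return handle

    def _buffer(self, handle):
        # Handle 0 stands for conventional memory, as in the XMS move structure.
        return self.conventional if handle == 0 else self.handles[handle]

    def move(self, length, src_handle, src_offset, dst_handle, dst_offset):
        # On real hardware, this is where the manager would enter protected
        # mode, copy the bytes, and return to real mode.
        src = self._buffer(src_handle)
        dst = self._buffer(dst_handle)
        dst[dst_offset:dst_offset + length] = src[src_offset:src_offset + length]


conventional = bytearray(640 * 1024)
xms = XmsManager(conventional)
big = xms.allocate(4 * 1024 * 1024)          # 4 MiB the program cannot touch directly

WORK = 0x10000                               # hypothetical work buffer in conventional memory
FAR = 3 * 1024 * 1024                        # somewhere deep inside the extended block
conventional[WORK:WORK + 5] = b"hello"
xms.move(5, 0, WORK, big, FAR)               # page out to extended memory
conventional[WORK:WORK + 5] = bytes(5)       # reuse the buffer for something else
xms.move(5, big, FAR, 0, WORK)               # page it back in
print(bytes(conventional[WORK:WORK + 5]))
```

The data survives the round trip, but only because the program explicitly paged it out and back in.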

The High Memory Area

A quirk of the Intel CPUs in real mode gave rise to a strange loophole which meant that the 80286 and later, running in real mode, could access a very small area of memory - 65520 bytes - just beyond the 1MiB boundary. This tiny area of memory is called the high memory area or HMA. The XMS manager manages this piece of RAM in a special way, and MS-DOS is able to take advantage of it by relocating some of its own data structures into this area. This frees up a small amount of conventional memory.
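The loophole falls out of real-mode address arithmetic. A physical address is computed as segment times 16 plus offset, and the largest segment:offset pair, FFFF:FFFF, points beyond 1MiB. On the 8088, with only 20 address lines, such addresses wrapped round to the bottom of memory. On the 80286 and later, the 21st address line (A20) can be enabled so that they reach real RAM instead. The `linear` helper in this short sketch is just for illustration.

```python
# Real-mode address arithmetic behind the HMA: physical = segment * 16 + offset.
def linear(segment, offset, a20_enabled=True):
    address = (segment << 4) + offset
    # With A20 disabled (the 8088 behaviour), bit 20 is lost and the address
    # wraps round to the bottom of memory.
    return address if a20_enabled else address & 0xFFFFF


hma_start = linear(0xFFFF, 0x0010)   # exactly 1 MiB
hma_end = linear(0xFFFF, 0xFFFF)     # the highest address real mode can form
print(hex(hma_start), hex(hma_end), hma_end - hma_start + 1)   # 0x100000 0x10ffef 65520
print(hex(linear(0xFFFF, 0x0010, a20_enabled=False)))          # 0x0: wrapped to zero
```

The 65520 bytes are a full 64 KiB segment minus the 16 bytes that FFFF:0000 to FFFF:000F spend below the 1MiB line.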

Does XMS help with the pressure on conventional memory? Well, perhaps. Yes, it meant that individual applications could use much more memory than was available in the conventional memory space, but at the cost of programming overhead. The app still had to store data in conventional memory and “page” it in and out. We also still have issues with TSRs: they have to live in conventional memory (although they can also use XMS). And as our PCs become more complex, they need more device drivers and more TSRs, for example to be a client on a network.

Conventional memory is still the bottleneck. Is there anything else that can be done?

The Intel 80386, EMM386 and LOADHIGH

The next generation of Intel CPU, the 80386, brought in more features. It was a 32-bit CPU, and supported virtual memory and paging through its new Memory Management Unit (MMU). Whilst these were mostly of interest to designers of modern OSes (such as OS/2 and Windows NT), the 80386 also offered a new virtual 8086 mode which helped MS-DOS and real mode users greatly. In virtual 8086 mode, all memory was accessible and the MMU was operational, but code executed in an environment that looked just like real mode. This gave an option for enabling use of more memory whilst keeping that all-important backward compatibility.

It was mainly intended for multitasking. OSes such as Digital Research’s Concurrent DOS 386 allowed multiple DOS applications to run at once, each in their own virtual 8086 environment with their own 640 KiB of conventional memory. Microsoft Windows used it to allow DOS windows to be opened, with each DOS window running in its own virtual 8086 environment.

But DOS found a use for virtual 8086 mode in the shape of the EMM386 tool. Loaded as a device driver, it ran DOS in a virtual 8086 environment. This environment was used not for multitasking, but for using the MMU to provide some much-needed extra memory.

DOS clones

There wasn’t just MS-DOS. IBM had PC-DOS, Digital Research made DR-DOS (also known as OpenDOS for a period), and there’s the newer open source FreeDOS. All of these had the same memory management features as MS-DOS, but often with different configuration options, and the device drivers had different names. For example, HIMEM.SYS is called HIDOS.SYS in DR-DOS.

We’ve talked about the 640 KiB of conventional memory quite a lot, but cast your memory back to the beginning of the article, and recall that there’s also 384 KiB of upper memory. So far, this has been off limits to us. The video card takes the first chunk of it, the BIOS ROM takes the last chunk, and the space in between is reserved for hardware that needs to be memory-mapped, such as network cards. However, even with a couple of hardware devices, there’s still quite a lot of space in this area which could be used by real mode applications.

EMM386 worked by enabling the MMU and using it to map pieces of extended memory into upper memory. The application would normally be oblivious to the fact that it was in virtual 8086 mode instead of real mode, but suddenly chunks of space in upper memory were usable RAM. Applications could make API calls into EMM386 and request upper memory. Unlike XMS, there was no copying of data required. To the application, it all looked like memory under the 1 MiB limit, and so it was directly addressable.
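The mechanism underneath is 386 paging. Linear addresses are split into 4 KiB pages, and a page table says which physical page each one uses. The sketch below identity-maps the first 1MiB, then points an empty stretch of upper memory at pages above 1MiB. The remapped range (C8000h-EFFFFh) and the starting extended-memory address are assumptions, and EMM386’s real data structures are not modelled.

```python
# Sketch of 386-style paging as used to fill upper memory with extended RAM.
# The remapped range and the extended-memory addresses are assumptions.
PAGE = 4096

# Identity-map the first 1 MiB: linear page n -> physical page n.
page_table = {n: n for n in range(0x100000 // PAGE)}

# Back an empty stretch of upper memory with RAM from above 1 MiB.
next_free_extended = 0x110000   # hypothetical: first page after the HMA
for linear_page in range(0xC8000 // PAGE, 0xF0000 // PAGE):
    page_table[linear_page] = next_free_extended // PAGE
    next_free_extended += PAGE


def translate(linear_address):
    """What the MMU does: look up the page, keep the offset within it."""
    page, offset = divmod(linear_address, PAGE)
    return page_table[page] * PAGE + offset


print(hex(translate(0x12345)))   # conventional memory is untouched: 0x12345
print(hex(translate(0xC8010)))   # upper memory now lands in extended RAM: 0x110010
```

The DOS program still uses an address below 1MiB. Only the translation step knows the bytes actually live higher up.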

There’s usually a significant amount of unused space in the upper memory area which EMM386 can make use of. Bits of upper memory are used for other purposes: at the very least, the video card and the system BIOS ROMs will consume a chunk of space, and network cards and other hardware can take up more. So the usable memory may be scattered around. A contiguous block of memory in the upper memory area which EMM386 manages is called an upper memory block or UMB. Almost always, there will be at least two UMBs on a typical MS-DOS system - one below the video memory and ROM, and another in between the video and the system BIOS ROMs. Depending on your hardware, upper memory may have to be split into more UMBs.
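Finding the UMBs amounts to looking for gaps between the regions that hardware has already claimed. This sketch assumes a hypothetical VGA machine with a network card mapped at D0000h. The addresses are typical but by no means universal, and `free_blocks` is an illustrative helper rather than anything EMM386 exposes.

```python
# Find free gaps (candidate UMBs) in the upper memory area, given the regions
# already claimed by hardware. The layout is a hypothetical VGA machine.
UMA_START, UMA_END = 0xA0000, 0x100000

occupied = [
    (0xA0000, 0xC0000, "video RAM"),
    (0xC0000, 0xC8000, "video BIOS ROM"),
    (0xD0000, 0xD4000, "network card"),
    (0xF0000, 0x100000, "system BIOS ROM"),
]


def free_blocks(occupied, start=UMA_START, end=UMA_END):
    blocks, cursor = [], start
    for lo, hi, _name in sorted(occupied):
        if lo > cursor:                  # a gap before this region
            blocks.append((cursor, lo))
        cursor = max(cursor, hi)
    if cursor < end:                     # a gap after the last region
        blocks.append((cursor, end))
    return blocks


umbs = free_blocks(occupied)
for lo, hi in umbs:
    print(f"UMB {lo:05X}h-{hi - 1:05X}h  {(hi - lo) // 1024} KiB")
```

On this layout, a single network card splits the free space into two UMBs, of 32 KiB and 112 KiB.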

But this wasn’t the most interesting or helpful thing about EMM386. DOS still loaded applications into conventional memory, so the increasing load of DOS, drivers and TSRs still put pressure on conventional memory. With MS-DOS 5 came the option of having DOS load programs into upper memory. The LOADHIGH command executed an application, but instead of placing it at the lowest free memory location, it placed it in upper memory. Its CONFIG.SYS counterpart, DEVICEHIGH, did the same for device drivers. DOS’s memory allocation routines were also able to provide memory from upper memory. Upper memory is smaller than conventional memory, so you couldn’t load big applications high, but you could load TSRs and device drivers there. That way you can keep them out of conventional memory, and free up more space for applications to use.

This isn’t the easiest thing to use. For one, the space is fragmented, as upper memory will almost always be split into two or more UMBs. Also, many TSRs use more memory while they are initialising, then free some of that memory before they terminate and stay resident. This means that you have two variables to consider: how much memory the TSR needs while it is initialising, and how much it needs once it is resident. Just because a TSR is 20 KiB when resident doesn’t guarantee that LOADHIGH will succeed in running it in a 20 KiB UMB. And once you’ve told LOADHIGH to load a program into a UMB, any memory it requests through the DOS memory allocation functions will come out of that same UMB.
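The initialising-versus-resident problem fits in a few lines of code. This is a simplified model: `load_high` is a hypothetical helper, not a DOS API, and the sizes are invented. The model assumes that a program which cannot start in the chosen UMB falls back to conventional memory. It uses the default rule described below of picking the UMB with the most free space.

```python
# A simplified model of LOADHIGH placing a TSR. Sizes and UMB layout are
# invented; load_high is a hypothetical helper, not a DOS API.
KIB = 1024


def load_high(umbs, name, init_size, resident_size):
    """umbs maps a UMB label to its free bytes and is updated in place."""
    # Try the UMB with the most free space.
    best = max(umbs, key=umbs.get)
    if umbs[best] < init_size:
        # Not enough room even to start, so this model falls back to
        # conventional memory.
        return (f"{name}: loaded low (needs {init_size // KIB} KiB to start, "
                f"largest UMB has {umbs[best] // KIB} KiB)")
    # The program starts in the UMB, then frees everything but its resident part.
    umbs[best] -= resident_size
    return f"{name}: loaded high in {best}"


umbs = {"UMB 1": 20 * KIB, "UMB 2": 12 * KIB}
# 20 KiB once resident, but it needs 28 KiB while initialising.
print(load_high(umbs, "network TSR", init_size=28 * KIB, resident_size=20 * KIB))
print(load_high(umbs, "mouse TSR", init_size=16 * KIB, resident_size=10 * KIB))
print(umbs)
```

The network TSR would fit the 20 KiB UMB once resident, but it never gets that far.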

Therefore you end up with a puzzle. Sometimes the simplistic approach of prefixing every TSR with LOADHIGH will work (by default, LOADHIGH selects the UMB with the most free space), but it may not be optimal. LOADHIGH supports extra options which give more control over which UMBs are available to an app. Manual optimisation quickly gets very difficult, but tools like MEMMAKER automate the discovery and optimisation process and pack more into upper memory.
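To see why a search can beat “LOADHIGH everything”, here is a brute-force sketch of that kind of optimisation. It is only an illustration, not MEMMAKER’s actual algorithm, and all the sizes are invented. It tries every assignment of each TSR to a UMB or to conventional memory, in load order. It then keeps the assignments where every program fits at its initialisation size, and picks the one that moves the most resident bytes out of conventional memory.

```python
# Brute-force search over TSR placements. An illustration only, not
# MEMMAKER's algorithm; all sizes are invented.
from itertools import product

KIB = 1024
UMBS = {"UMB 1": 32 * KIB, "UMB 2": 20 * KIB}
# (name, init size, resident size), in the order they are loaded
TSRS = [
    ("network", 28 * KIB, 20 * KIB),
    ("mouse", 16 * KIB, 10 * KIB),
    ("cdrom", 14 * KIB, 12 * KIB),
]


def bytes_saved(assignment):
    """Resident bytes kept out of conventional memory, or None if infeasible."""
    free = dict(UMBS)
    saved = 0
    for (_name, init_size, resident_size), target in zip(TSRS, assignment):
        if target == "low":
            continue
        if free[target] < init_size:
            return None                  # this program could not start there
        free[target] -= resident_size
        saved += resident_size
    return saved


choices = list(UMBS) + ["low"]
results = []
for assignment in product(choices, repeat=len(TSRS)):
    saved = bytes_saved(assignment)
    if saved is not None:
        results.append((saved, assignment))

best_saved, best = max(results)
print(dict(zip((t[0] for t in TSRS), best)), best_saved // KIB, "KiB kept out of conventional memory")
```

With these made-up numbers, always picking the UMB with the most free space loads the network and mouse TSRs high and leaves the CD-ROM driver low, saving 30 KiB. The search instead leaves the mouse driver low and saves 32 KiB. Real configurations have many more options, which is why the search was worth automating.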

Conclusion

The restrictions on memory only exist in real mode (or virtual 8086 mode). If you write programs that run in protected mode, this whole class of problem goes away - and if you write for Windows or other multi-tasking OSes, you will be using protected mode already.

Of course, with “backward compatibility” such a huge part of the PC compatible world, you can’t just hand-wave away a decade or more of real-mode software, so you need solutions.

PC memory management in MS-DOS always bothered me, when it wasn’t clear what was going on or why. But with hindsight, and proper knowledge of these technologies, it is easier to understand, and the solutions are actually quite reasonable to make the best of a difficult situation.

About the author

Richard Downer is a software engineer turned cloud solutions architect, specialising in AWS, and based in Scotland. Richard's interest in technology extends to retro computing and amateur hardware hacking with Raspberry Pi and FPGA.